Introduction

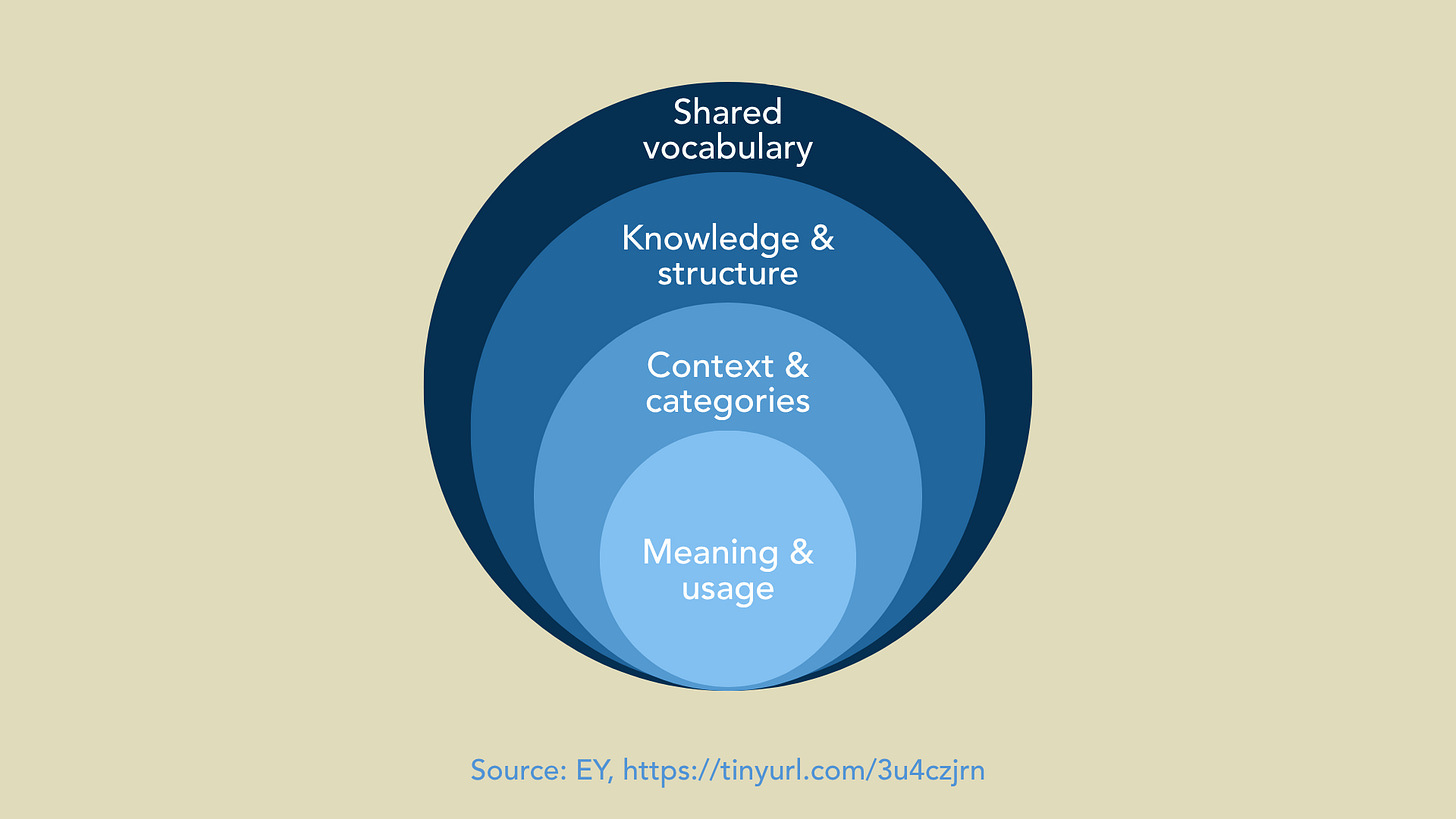

As AI systems move from narrow, task-specific assistants, such as chatbots, to general-purpose partners for all things digital, the quality and precision of the “context” they’re given has become the single biggest determinant of whether they perform reliably or hallucinate spectacularly. For LLMs, context goes beyond simple prompt tweaking. Context is optimized when ontologies and knowledge graphs structure it explicitly, encoding relationships and meaning so that disambiguated facts surface and the AI sees the right information at the right moment.

Building a robust context engineering practice means treating context as an intentional arrangement of domain-specific information and knowledge, so that AI can synthesize responses reliably.

Structured, machine-readable knowledge provides context and clarity for machines. Ontologies have emerged as the gold standard for context engineering, celebrated for their ability to give concepts clear context and meaning. However, not all ontologies are created equal. With an upper-level, lightweight ontology like the Simple Knowledge Organization System (SKOS), concepts are easily structured with hierarchical (broader and narrower), associative (related), and equivalence and mapping (exact, close, broad, and narrow match) relationships to form a thesaurus. This structured simplicity reduces complexity, making data easier for humans to curate and for machines to interpret.
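To make these relationship types concrete, here is a minimal sketch in Python that holds a tiny thesaurus as plain (subject, predicate, object) tuples instead of using an RDF library. The example.org and example.com URIs and the `with_inverses` helper are hypothetical; the property names come from the W3C SKOS vocabulary, which declares skos:narrower the inverse of skos:broader and treats skos:related, skos:exactMatch, and skos:closeMatch as symmetric.

```python
SKOS = "http://www.w3.org/2004/02/skos/core#"
EX = "http://example.org/thesaurus/"  # hypothetical concept namespace

# Hypothetical sample thesaurus; labels are plain strings for simplicity.
thesaurus = {
    (EX + "vehicles", SKOS + "prefLabel", "Vehicles"),
    (EX + "cars", SKOS + "prefLabel", "Cars"),
    (EX + "roads", SKOS + "prefLabel", "Roads"),
    (EX + "cars", SKOS + "broader", EX + "vehicles"),  # hierarchical
    (EX + "cars", SKOS + "related", EX + "roads"),     # associative
    # mapping to a concept in another (hypothetical) scheme
    (EX + "cars", SKOS + "exactMatch", "http://example.com/vocab/automobiles"),
}

INVERSES = {SKOS + "broader": SKOS + "narrower", SKOS + "narrower": SKOS + "broader"}
SYMMETRIC = {SKOS + "related", SKOS + "exactMatch", SKOS + "closeMatch"}

def with_inverses(triples):
    """Return the triples plus those implied by SKOS inverse and symmetric properties."""
    out = set(triples)
    for s, p, o in triples:
        if p in INVERSES:
            out.add((o, INVERSES[p], s))
        elif p in SYMMETRIC:
            out.add((o, p, s))
    return out

full = with_inverses(thesaurus)
print((EX + "vehicles", SKOS + "narrower", EX + "cars") in full)  # True
print((EX + "roads", SKOS + "related", EX + "cars") in full)      # True
```

Stating only skos:broader and deriving skos:narrower keeps the curated data smaller and stops the two directions from drifting out of sync.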

Tell Me Why I Need A Thesaurus

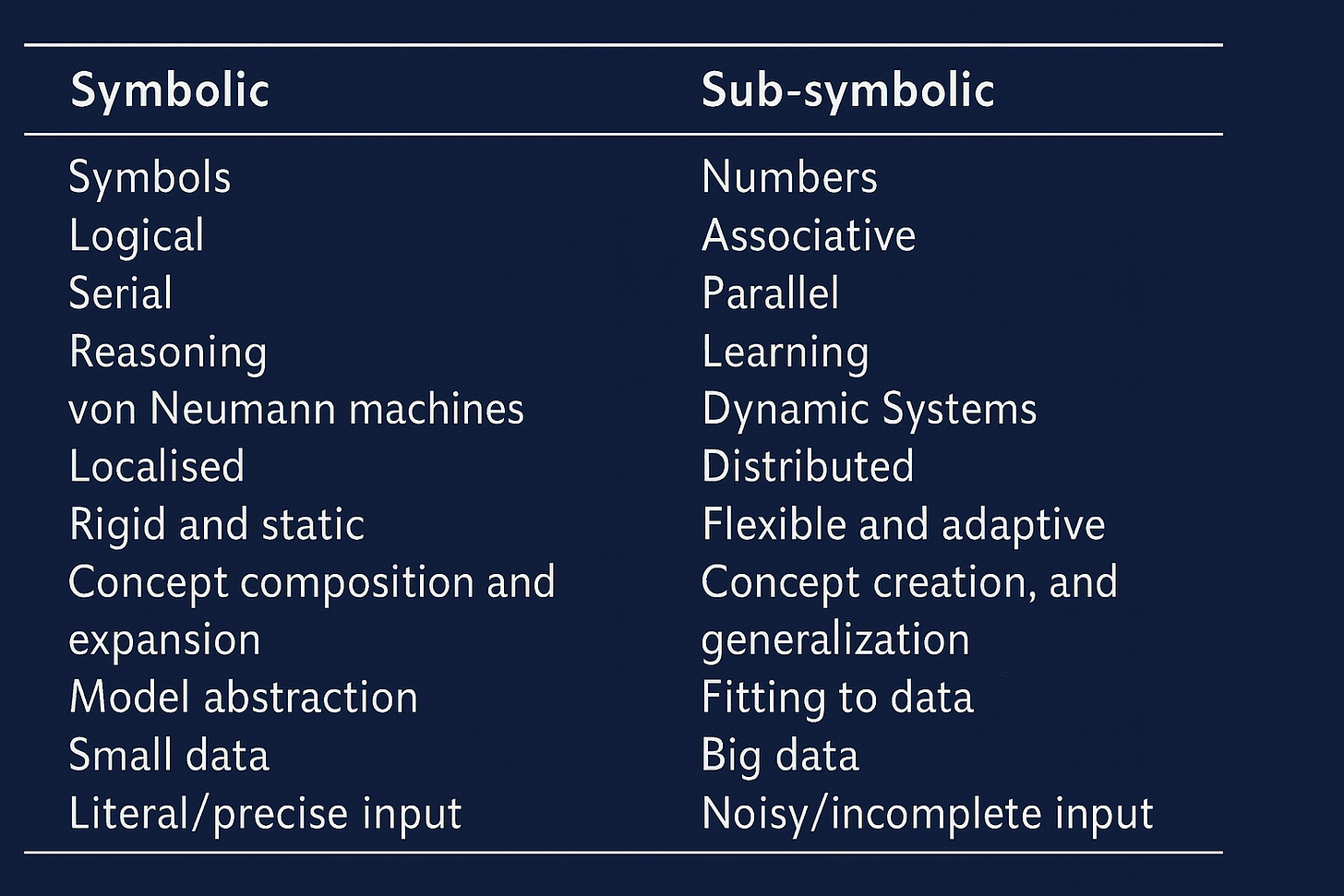

A thesaurus is a workhorse: it benefits information retrieval and discovery systems, and it is especially valuable for symbolic AI. Symbolic AI, sometimes called Good Old-Fashioned AI or GOFAI, is one of the earliest paradigms in artificial intelligence. Current commercial AI systems such as OpenAI’s ChatGPT are deep-learning, generative AI systems; ChatGPT, more specifically, is an autoregressive large language model built on the transformer architecture. In contrast with symbolic AI, ChatGPT does not rely on hand-crafted rules or explicit knowledge bases. Instead, it harnesses statistical patterns in data to produce human-like text, making it highly flexible but also less inherently transparent than a rule-based system.

Emerging AI research indicates that symbolic AI is needed to drive accurate, reliable AI results and, even more critically, to bolster AI’s natural language conversational capabilities. The thing is, a thesaurus structured using an ontology like SKOS is technically a form of symbolic AI and a fundamental, machine-readable representation of knowledge. As Gary Marcus puts it in his paper The Next Decade in AI: Four Steps Towards Robust Artificial Intelligence, "To build a robust, knowledge-driven approach to AI we must have the machinery of symbol-manipulation in our toolkit. Too much of useful knowledge is abstract to make do without tools that represent and manipulate abstraction..."

Explicit Concept Hierarchies and Relations

A thesaurus modeled using the SKOS ontology makes taxonomic relations, such as skos:broader and skos:narrower, first-class citizens in a machine-readable format. By modeling concepts as URIs and linking concepts with properties, a reasoner can traverse the hierarchy, infer implicit broader/narrower links (e.g., transitive closures), and classify new instances under the correct concepts. This explicit structure is essential for symbolic AI systems that rely on rule-based classification and inheritance of properties.
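As an illustration of traversing the hierarchy, the sketch below expands a concept to everything beneath it, the kind of walk a search system might use for query expansion. It assumes the hierarchy is stated only with skos:broader; the concept URIs and the `narrower_index` and `descendants` helpers are hypothetical.

```python
from collections import defaultdict, deque

SKOS = "http://www.w3.org/2004/02/skos/core#"
EX = "http://example.org/thesaurus/"  # hypothetical concept namespace

# Hypothetical hierarchy, stated only with skos:broader (narrower concept -> broader concept).
broader_triples = [
    (EX + "cars", SKOS + "broader", EX + "vehicles"),
    (EX + "trucks", SKOS + "broader", EX + "vehicles"),
    (EX + "electric-cars", SKOS + "broader", EX + "cars"),
]

def narrower_index(triples):
    """Index broader concept -> set of narrower concepts by reading skos:broader backwards."""
    index = defaultdict(set)
    for child, predicate, parent in triples:
        if predicate == SKOS + "broader":
            index[parent].add(child)
    return index

def descendants(concept, index):
    """Breadth-first walk collecting every concept below `concept`; the seen-set guards against cycles."""
    seen, queue = set(), deque([concept])
    while queue:
        for child in index.get(queue.popleft(), ()):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen

index = narrower_index(broader_triples)
print(sorted(c[len(EX):] for c in descendants(EX + "vehicles", index)))
# ['cars', 'electric-cars', 'trucks']
```

A search for "vehicles" could then also match documents indexed under any of the returned concepts.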

Lightweight Inference Support

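Although a SKOS thesaurus is less expressive than an ontology modeled using the Web Ontology Language (OWL), SKOS defines a core set of semantics that many RDF and OWL reasoners can process out of the box. SKOS deliberately does not declare skos:broader transitive, so that imperfect thesauri do not produce runaway inferences; instead, skos:broader is a sub-property of skos:broaderTransitive, which is transitive. So if Concept A is broader than Concept B, and Concept B is broader than Concept C, a SKOS-aware reasoner can infer that Concept A is also broader than Concept C, recorded as C skos:broaderTransitive A. For well-curated thesauri it is usually fair to treat skos:broader itself as transitive. This kind of lightweight inference supports symbolic rule engines, enabling efficient query answering and classification over large thesauri.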
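A minimal sketch of that inference in Python, assuming the thesaurus is a list of (subject, predicate, object) tuples; the `broader_transitive` function and the example.org URIs are hypothetical. It follows the SKOS data model: skos:broader is a sub-property of skos:broaderTransitive, and only the latter is transitive, so the inferred links are emitted as skos:broaderTransitive rather than skos:broader.

```python
SKOS = "http://www.w3.org/2004/02/skos/core#"
EX = "http://example.org/thesaurus/"  # hypothetical concept namespace

def broader_transitive(triples):
    """Infer skos:broaderTransitive links from (subject, predicate, object) triples.

    skos:broader is a sub-property of skos:broaderTransitive, so every broader
    link seeds the relation; a naive fixed-point loop then closes it under
    transitivity. Fine for small examples, slow for large thesauri.
    """
    closure = {(s, o) for s, p, o in triples
               if p in (SKOS + "broader", SKOS + "broaderTransitive")}
    changed = True
    while changed:
        changed = False
        for a, b in list(closure):
            for c, d in list(closure):
                if b == c and (a, d) not in closure:
                    closure.add((a, d))
                    changed = True
    return {(s, SKOS + "broaderTransitive", o) for s, o in closure}

facts = [
    (EX + "B", SKOS + "broader", EX + "A"),  # A is broader than B
    (EX + "C", SKOS + "broader", EX + "B"),  # B is broader than C
]
inferred = broader_transitive(facts)
print((EX + "C", SKOS + "broaderTransitive", EX + "A") in inferred)  # True
print(len(inferred))  # 3
```

For large thesauri, a triple store would more typically answer the same question with a SPARQL 1.1 property path such as `?c skos:broader+ ?a` instead of materializing the closure in application code.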

Synonym and Mapping Handling for Lexical Reasoning

SKOS provides dedicated properties for labels and mappings: skos:prefLabel, skos:altLabel, skos:hiddenLabel, as well as mapping relations like skos:exactMatch and skos:closeMatch. Symbolic AI systems leverage these to normalize synonyms, resolve co-references, and map between different vocabularies, which is crucial for tasks like semantic search, question answering, and rule-based natural language processing (NLP) pipelines. By interpreting these label relations symbolically, AI engines can reason about lexical variants and crosswalk disparate datasets with far less ambiguity.
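A minimal sketch of label-based normalization, assuming labels are plain strings without language tags: every prefLabel, altLabel, and hiddenLabel is normalized and indexed to its concept, and skos:exactMatch links are followed to an equivalent concept in another scheme. The URIs, sample labels, and the `normalize`, `build_lookup`, and `crosswalk` helpers are hypothetical.

```python
import unicodedata

SKOS = "http://www.w3.org/2004/02/skos/core#"
EX = "http://example.org/thesaurus/"  # hypothetical concept namespace

labels = [
    (EX + "cars", SKOS + "prefLabel", "Car"),
    (EX + "cars", SKOS + "altLabel", "Automobile"),
    (EX + "cars", SKOS + "hiddenLabel", "automoble"),  # misspelling: matched, never displayed
    (EX + "cars", SKOS + "exactMatch", "http://example.com/vocab/auto"),
]

LABEL_PROPS = {SKOS + "prefLabel", SKOS + "altLabel", SKOS + "hiddenLabel"}

def normalize(text):
    """Unicode-normalize, case-fold, and trim a label so lexical variants compare equal."""
    return unicodedata.normalize("NFKC", text).casefold().strip()

def build_lookup(triples):
    """Map each normalized label to the set of concepts carrying it (more than one means ambiguity)."""
    lookup = {}
    for s, p, o in triples:
        if p in LABEL_PROPS:
            lookup.setdefault(normalize(o), set()).add(s)
    return lookup

def crosswalk(concept, triples):
    """Follow skos:exactMatch links from a concept to equivalents in other schemes."""
    return sorted(o for s, p, o in triples if s == concept and p == SKOS + "exactMatch")

lookup = build_lookup(labels)
(concept,) = lookup[normalize("AUTOMOBILE")]  # unpacking fails loudly if the label is ambiguous
print(concept, crosswalk(concept, labels))
```

Indexing each label to a set of concepts, rather than a single one, keeps genuine homonyms visible instead of silently overwriting them; a real pipeline would route the ambiguous case to disambiguation rather than treat it as an error.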

Interoperability within Neuro-symbolic Architectures

In neuro-symbolic AI, where symbolic reasoning is combined with neural networks, a SKOS thesaurus serves as a backbone for the symbolic layer. It anchors learned embeddings to well-defined concepts, enabling hybrid systems to both learn from data and apply logical rules consistently.
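One simple way to picture this anchoring, offered as a toy sketch rather than a production design: each concept gets a vector (here hand-made, hypothetical numbers standing in for model embeddings), a neural embedding is snapped to the closest concept by cosine similarity, and the symbolic layer then supplies that concept's broader chain. The URIs, vectors, and the `anchor` function are all assumptions for illustration.

```python
import math

EX = "http://example.org/thesaurus/"  # hypothetical concept namespace

# Hypothetical toy embeddings; in practice these would come from an embedding model.
concept_vectors = {
    EX + "cars": [0.9, 0.1, 0.0],
    EX + "trucks": [0.7, 0.6, 0.0],
    EX + "roads": [0.1, 0.2, 0.9],
}
# skos:broader links as narrower -> broader
broader = {EX + "cars": EX + "vehicles", EX + "trucks": EX + "vehicles"}

def cosine(u, v):
    """Cosine similarity of two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def anchor(vector):
    """Snap an embedding to the closest concept, then attach its broader chain."""
    best = max(concept_vectors, key=lambda c: cosine(vector, concept_vectors[c]))
    chain = [best]
    # Walk upward, stopping at a top concept or if a cycle would repeat a concept.
    while chain[-1] in broader and broader[chain[-1]] not in chain:
        chain.append(broader[chain[-1]])
    return chain

print([c[len(EX):] for c in anchor([0.85, 0.2, 0.05])])  # ['cars', 'vehicles']
```

The neural side only has to land near the right concept; everything after that (the hierarchy, and any rules attached to it) is explicit and inspectable.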

A knowledge graph built with SKOS as a foundational framework provides the symbolic modules and elements. Its explicit concepts and relations let a reasoner enforce domain constraints, perform logical deduction, and generate explanations, thereby enhancing both the interpretability and the robustness of AI reasoning. In other words, a SKOS thesaurus can shine some light into the black box that is AI.
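Explanations can come straight from the graph structure. A minimal sketch, assuming the hierarchy is a list of (narrower, broader) pairs with hypothetical URIs: a breadth-first search up the skos:broader links returns the chain that justifies placing one concept under another, or None when no such chain exists. The `explain` function is hypothetical.

```python
from collections import deque

EX = "http://example.org/thesaurus/"  # hypothetical concept namespace

# skos:broader links as (narrower, broader) pairs
broader_links = [
    (EX + "electric-cars", EX + "cars"),
    (EX + "cars", EX + "vehicles"),
    (EX + "vehicles", EX + "transport"),
]

def explain(concept, target, links):
    """Breadth-first search up skos:broader links; return a readable justification or None."""
    parents = {}
    for child, parent in links:
        parents.setdefault(child, []).append(parent)
    previous = {concept: None}  # remembers how each concept was reached
    queue = deque([concept])
    while queue:
        node = queue.popleft()
        if node == target:
            path = []
            while node is not None:  # rebuild the path back to the start
                path.append(node)
                node = previous[node]
            path.reverse()
            return " skos:broader ".join(p[len(EX):] for p in path)
        for parent in parents.get(node, []):
            if parent not in previous:
                previous[parent] = node
                queue.append(parent)
    return None

print(explain(EX + "electric-cars", EX + "transport", broader_links))
# electric-cars skos:broader cars skos:broader vehicles skos:broader transport
```

Because the justification is just the path the search followed, it can be shown to a user or auditor as-is.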

SKOS’s formal yet lightweight thesaurus structure provides the scaffolding for symbolic knowledge representation, inference, and interoperability—core capabilities that power logical reasoning for AI systems.

Not All Ontologies Are Created Equal

But wait, isn’t there more to ontologies than SKOS?

There are two main types of ontologies: lightweight ontologies, designed to provide a standard framework for expressing knowledge organization systems, and lower ontologies, also called domain, application, or heavyweight ontologies, used to describe more specific concepts, classes, properties, and relations tailored to subject areas or use cases.

SKOS is a W3C Recommendation: a lightweight upper ontology designed to represent controlled vocabularies, taxonomies, and thesauri. Because SKOS is built on RDF, a SKOS vocabulary can be serialized in any standard RDF format, such as RDF/XML, JSON-LD, Turtle, N3, and N-Triples. In other words, SKOS’s encoding formats are standards-based, interoperable, and machine-readable.
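To show what one of these serializations looks like, here is a minimal Python sketch that writes a few SKOS triples as N-Triples, the simplest line-based RDF format: one `<subject> <predicate> <object> .` statement per line, with literals quoted and language-tagged. The concept URIs and the `term` and `to_ntriples` helpers are hypothetical, and only IRIs and language-tagged literals are handled.

```python
SKOS = "http://www.w3.org/2004/02/skos/core#"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"
EX = "http://example.org/thesaurus/"  # hypothetical concept namespace

def term(value):
    """Render an IRI as <...>; a (text, lang) tuple becomes a language-tagged literal."""
    if isinstance(value, tuple):
        text, lang = value
        # Escape backslash first, then quotes and line breaks, as N-Triples requires.
        escaped = (text.replace("\\", "\\\\").replace('"', '\\"')
                       .replace("\n", "\\n").replace("\r", "\\r"))
        return f'"{escaped}"@{lang}'
    return f"<{value}>"

def to_ntriples(triples):
    """Serialize (subject, predicate, object) triples, one statement per line."""
    return "\n".join(f"{term(s)} {term(p)} {term(o)} ." for s, p, o in triples)

doc = to_ntriples([
    (EX + "cars", RDF_TYPE, SKOS + "Concept"),
    (EX + "cars", SKOS + "prefLabel", ("Cars", "en")),
    (EX + "cars", SKOS + "broader", EX + "vehicles"),
])
print(doc)
```

In practice an RDF library (rdflib in Python, for instance) can write the same graph as Turtle, RDF/XML, JSON-LD, or N-Triples, so the choice of format can follow whatever the consuming system expects.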

Conclusion

Modeling with SKOS first ensures that the basics are correct and validated before adding the complexity that comes with heavier ontologies. SKOS saves time and effort long-term by creating a reliable foundation for deeper, domain-specific ontologies when (and only when) they are justified by real requirements. I promise you, a SKOS thesaurus will deliver immense value, with or without AI. And with SKOS, you have built a knowledge graph, or what I like to call “knowledge graph lite”.
