Why AI Isn't Autonomous (Yet), Part III

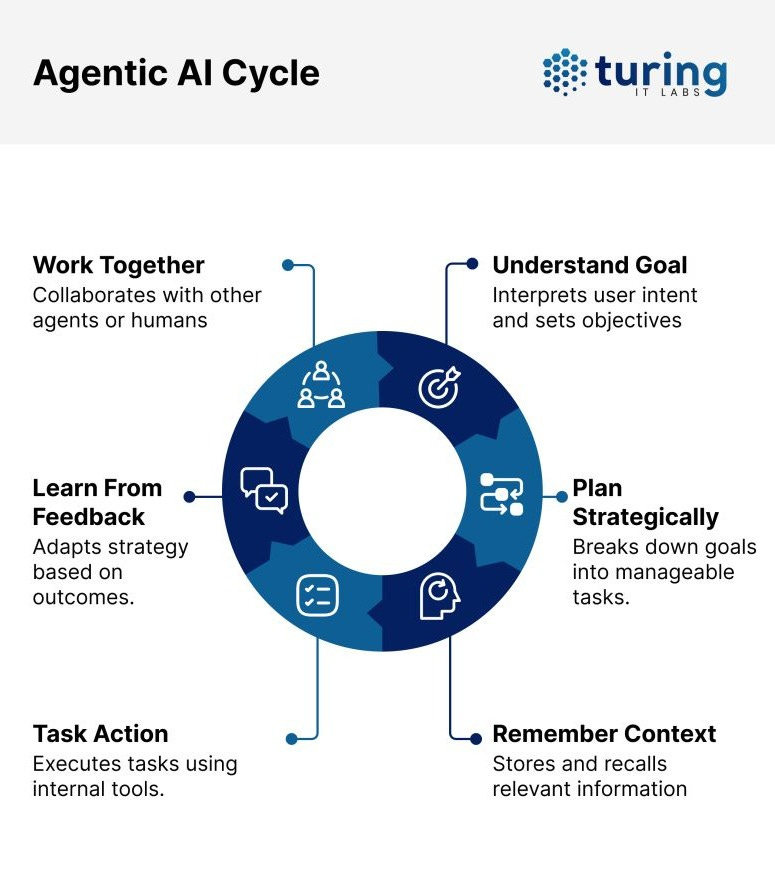

What Agentic AI Needs

Introduction

Agentic AI becomes possible only after a true knowledge structure is in place. That’s because agentic AI doesn’t just correlate patterns in data; it must interpret, reason over, and act upon structured representations of the world. Without structured knowledge, data-driven models generate high-dimensional correlations, not reliable or verifiable results. To build autonomous, goal-driven, agentic AI at scale, we must move beyond a data-only approach.

Raw data is only one ingredient, and lexical and semantic labels alone will not suffice. Agentic AI requires that we move beyond a data-only approach in order to harness structured information and produce machine-actionable knowledge. Without a holistic knowledge infrastructure, AI cannot reliably reason, explain its decisions, or adapt to new scenarios. It remains stuck in pattern recognition, unable to act with true autonomy.

Data vs. Information vs. Knowledge

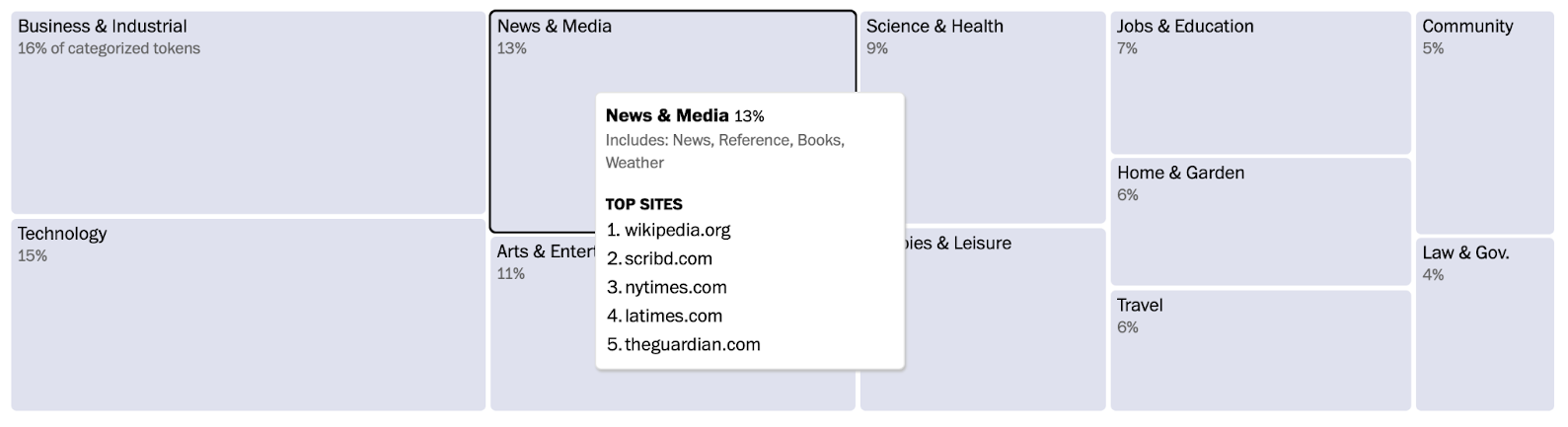

The knowledge pyramid, also known as the data–information–knowledge–wisdom (DIKW) pyramid or DIKW hierarchy, is a classic and popular reference model, often cited as an indicator of organizational maturity in data management and data governance. The exact origin of the DIKW pyramid is uncertain, although most scholars agree that it is rooted in Russell Ackoff’s 1989 paper, “From Data to Wisdom.” Without getting hung up on the history of the framework, it is critical to acknowledge that the DIKW hierarchy applies to both humans and digital systems as a representation of how intelligence emerges.

And yes, I said it. There is no intelligence without knowledge. If we can agree that knowledge is a base requirement for intelligence and intelligent systems, then the DIKW hierarchy should serve as the mantra for agentic AI.

All elements within the hierarchy are equally important. Each contributes as one part of a whole system designed to lend content and meaning to information retrieval tasks and knowledge acquisition.

The Basic Recipe for Knowledge

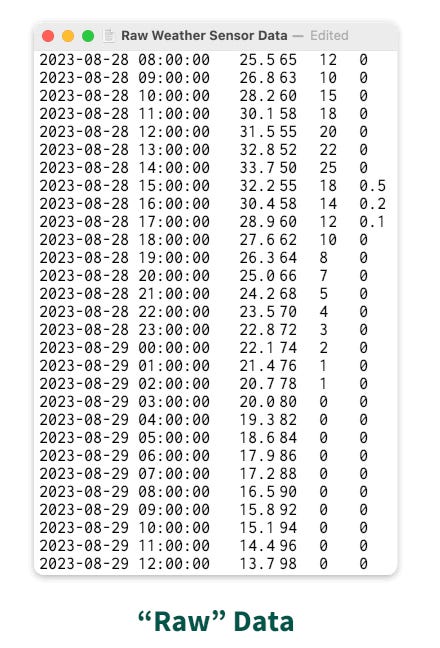

To better understand the knowledge-making process, let’s start with definitions. Data are raw, unprocessed facts or measurements. Examples include sensor readings, click logs, transaction records, and survey statistics. Data are the raw input, which may or may not carry meaning as individual data points.

Information emerges when data are structured and contextualized: trends over time, threshold alerts, annotated records. Information is the assembly of data into meaningful output.

Knowledge embeds information within formal models (ontologies, taxonomies, rules, and schemas) that people or machines can interrogate and reason over. Knowledge synthesizes data and information, combining at least two different pieces of information to assert true and meaningful observations, ideas, or statements of fact.
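To make the recipe a little more concrete, here is a minimal Python sketch of the three layers. Everything in it is an assumption made for illustration: the temperature readings, the `pump-7` sensor name, the 80.0 threshold, and the `contextualize` and `assess` helpers are all hypothetical, and a single hand-written rule is only a stand-in for the formal models a real knowledge layer would use.

```python
from dataclasses import dataclass
from statistics import mean

# DATA: raw, unprocessed measurements (hypothetical temperature readings).
readings = [71.2, 72.8, 74.9, 77.5, 80.1]


# INFORMATION: data that has been structured and contextualized.
@dataclass(frozen=True)
class Information:
    sensor: str
    average: float
    rising: bool           # a trend over time
    above_threshold: bool  # a threshold alert


def contextualize(sensor, values, threshold):
    """Turn raw values into information: an average, a trend, and an alert."""
    rising = all(a < b for a, b in zip(values, values[1:]))
    return Information(sensor, mean(values), rising, values[-1] > threshold)


# KNOWLEDGE: an explicit rule that combines at least two pieces of
# information into a statement a person or machine can reason over.
def assess(info):
    if info.rising and info.above_threshold:
        return "overheating"
    if info.above_threshold:
        return "hot"
    return "normal"


info = contextualize("pump-7", readings, threshold=80.0)
status = assess(info)
print(info.sensor, status)
```

The readings alone say nothing. The trend and the alert are information. The statement that the pump is overheating only appears once a rule combines the two.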

What About Wisdom?

You may wonder why I have not offered the stage to wisdom. Wisdom requires the combination of knowledge, experience, and insight, which inherently calls upon intelligence but is not, in itself, knowledge or intelligence. In her 1982 paper, “Wisdom and Intelligence: The Nature and Function of Knowledge in the Later Years,” Vivian Clayton defines wisdom as “the ability to grasp human nature, which is paradoxical, contradictory, and subject to continual change.” She goes on to posit that “the function of wisdom is characterized as provoking the individual to consider the consequences of his actions both to self and their effects on others. Wisdom, therefore, evokes questions of should one pursue a particular course of action.” (Clayton, 1982)

Wisdom, at this point in the trajectory of research and development in cognitive science, is inextricably a human endeavor. Therefore, I intentionally reserve wisdom for the human end user, the person who currently holds the capacity for developing wisdom and who is the customer persona for AI systems. Taste, intuition, and judgement can be thought of as flavors of wisdom. Perhaps agentic AI seeks to become wise, if we adhere to Clayton’s definition of wisdom.

One could argue that a well-constructed, autonomous AI system seeks to consider the consequences of its actions and does, in fact, include reasoning frameworks to grapple with courses of action. However, an autonomous system would have to maintain complex networks to truly grasp the ever-evolving states of human nature. Given that AI still struggles with contextual understanding, I believe it is safe to say that AI has not reached wisdom and still struggles to embody knowledge.

The Name of the Game is Intelligence

Ultimately, the DIKW hierarchy seeks to establish a framework for human intelligence, which has been extended to define machine intelligence. If the name of the game is artificial intelligence, we had best start working on how to manage information and knowledge, not just data.

In his book, The Birth of Intelligence, Daeyeol Lee defines intelligence as “the ability to solve complex problems or make decisions with outcomes benefiting the actor, and has evolved in lifeforms to adapt to diverse environments for their survival and reproduction. For animals, problem-solving and decision-making are functions of their nervous systems, including the brain, so intelligence is closely related to the nervous system.” Intelligence includes the nervous system because senses, environmental feedback, and perception are critical components of intelligence. For now, machine intelligence is still defined relative to human intelligence and can be understood as a surrogate for human intelligence.

If we are building intelligent autonomous systems, then shouldn't the systems include all of the base, required ingredients for intelligence?

The Limits of Data-Only Approaches

Let’s dive into why data-only approaches cannot support agentic AI. It’s easy to forget that data by itself carries no meaning. Streams of raw, syntactic tokens lack the shared definitions and concept mappings that give information its semantic grounding. That leaves AI systems adrift, unable to identify entities reliably, adapt to unfamiliar contexts, or explain their choices.

Without formal ontologies and knowledge graphs to encode classes, relationships, hierarchies, and logical rules, models tend to overfit narrow domains, stumble when confronted with novel situations, obscure their decision-making behind inscrutable “black boxes,” and waste colossal compute effort relearning facts that could have been explicitly encoded. In short, data-only approaches are brittle, opaque, and inefficient because true intelligence requires more than patterns in numbers.
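As a hedged illustration of what “explicitly encoded” can mean, the sketch below stores a handful of facts as subject-predicate-object triples and derives new facts from the class hierarchy and one inheritance rule. The names (`TRIPLES`, `subclass_of`, `instance_of`, `has_ability`, `tweety`) are hypothetical, and a Python set is only a stand-in for a real ontology language and graph store.

```python
# A tiny knowledge graph: each fact is a (subject, predicate, object) triple.
TRIPLES = {
    ("Sparrow", "subclass_of", "Bird"),
    ("Bird", "subclass_of", "Animal"),
    ("Bird", "has_ability", "fly"),
    ("tweety", "instance_of", "Sparrow"),
}


def objects(subject, predicate):
    """Return every object asserted for the given subject and predicate."""
    return {o for s, p, o in TRIPLES if s == subject and p == predicate}


def ancestors(cls):
    """Return all superclasses of cls, following subclass_of transitively."""
    found = set()
    frontier = [cls]
    while frontier:
        current = frontier.pop()
        for parent in objects(current, "subclass_of"):
            if parent not in found:
                found.add(parent)
                frontier.append(parent)
    return found


def classes_of(entity):
    """Return the entity's direct classes plus every inherited superclass."""
    direct = objects(entity, "instance_of")
    inherited = set()
    for cls in direct:
        inherited |= ancestors(cls)
    return direct | inherited


def abilities_of(entity):
    """Rule: an instance inherits the abilities of every class it belongs to."""
    abilities = set()
    for cls in classes_of(entity):
        abilities |= objects(cls, "has_ability")
    return abilities


print(sorted(classes_of("tweety")))
print(sorted(abilities_of("tweety")))
```

Nothing in the graph states that `tweety` is an animal or can fly. Both facts follow from the encoded hierarchy and rule, so they can be traced back to specific triples rather than relearned from examples.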

Lack of Semantic Grounding

Because raw and syntactic data streams contain no built-in meanings, shared symbols or concept definitions, there is no semantic grounding. Without a formal semantic framework (a structured grounding between symbols and real-world referents), agents cannot reliably identify entities or define their relationships. The result? Brittle, narrow, domain-specific behaviors, with little capacity for reasoning or adaptation.
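One way to picture semantic grounding is as a mapping from surface symbols to concept identifiers. The sketch below is a toy under stated assumptions: the `LEXICON` entries, the `kind:name` identifier scheme, and the `ground` function are all hypothetical, and real systems resolve entities against far richer context than a single expected kind.

```python
from typing import Optional

# Hypothetical lexicon: surface forms (symbols) mapped to the concept
# identifiers (referents) they may denote. Identifiers use "kind:name".
LEXICON = {
    "nyc": {"city:new_york"},
    "new york city": {"city:new_york"},
    "jaguar": {"animal:jaguar", "company:jaguar_cars"},
}


def ground(token: str, expected_kind: Optional[str] = None) -> Optional[str]:
    """Resolve a token to one concept identifier, or None if that is not possible."""
    candidates = LEXICON.get(token.strip().lower(), set())
    if expected_kind is not None:
        prefix = expected_kind + ":"
        candidates = {c for c in candidates if c.startswith(prefix)}
    # Ground only when exactly one referent remains. An unknown or ambiguous
    # symbol stays ungrounded instead of being guessed.
    if len(candidates) == 1:
        return next(iter(candidates))
    return None


print(ground("NYC"))
print(ground("New York City"))
print(ground("jaguar"))
print(ground("jaguar", expected_kind="animal"))
```

Two different strings resolve to the same city, while `jaguar` stays unresolved until the context says which kind of thing is meant. Without the lexicon, all three are just unrelated tokens.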