Knowledge Graph Lite, Part III

The Mighty Thesaurus, SKOS in Action

This article is the final installment of a three-part series. Part I can be found here and Part II can be found here.

Introduction

We've covered the why and the what—now it's time for the how. In this final installment, we're rolling up our sleeves to model a complete SKOS ontology for the domain of Artificial Intelligence.

We'll journey from a controlled vocabulary through a taxonomy to a rich thesaurus, using every SKOS class, property, and relation along the way. We will explore how URIs (Uniform Resource Identifiers) transform our knowledge model from a simple vocabulary into a globally interoperable knowledge graph that supports entity reconciliation, category discovery, and seamless integration with broader semantic systems. Consider this your practical workshop for building a “knowledge graph lite” that can power RAG systems, improve training data, and bring semantic clarity to AI applications.

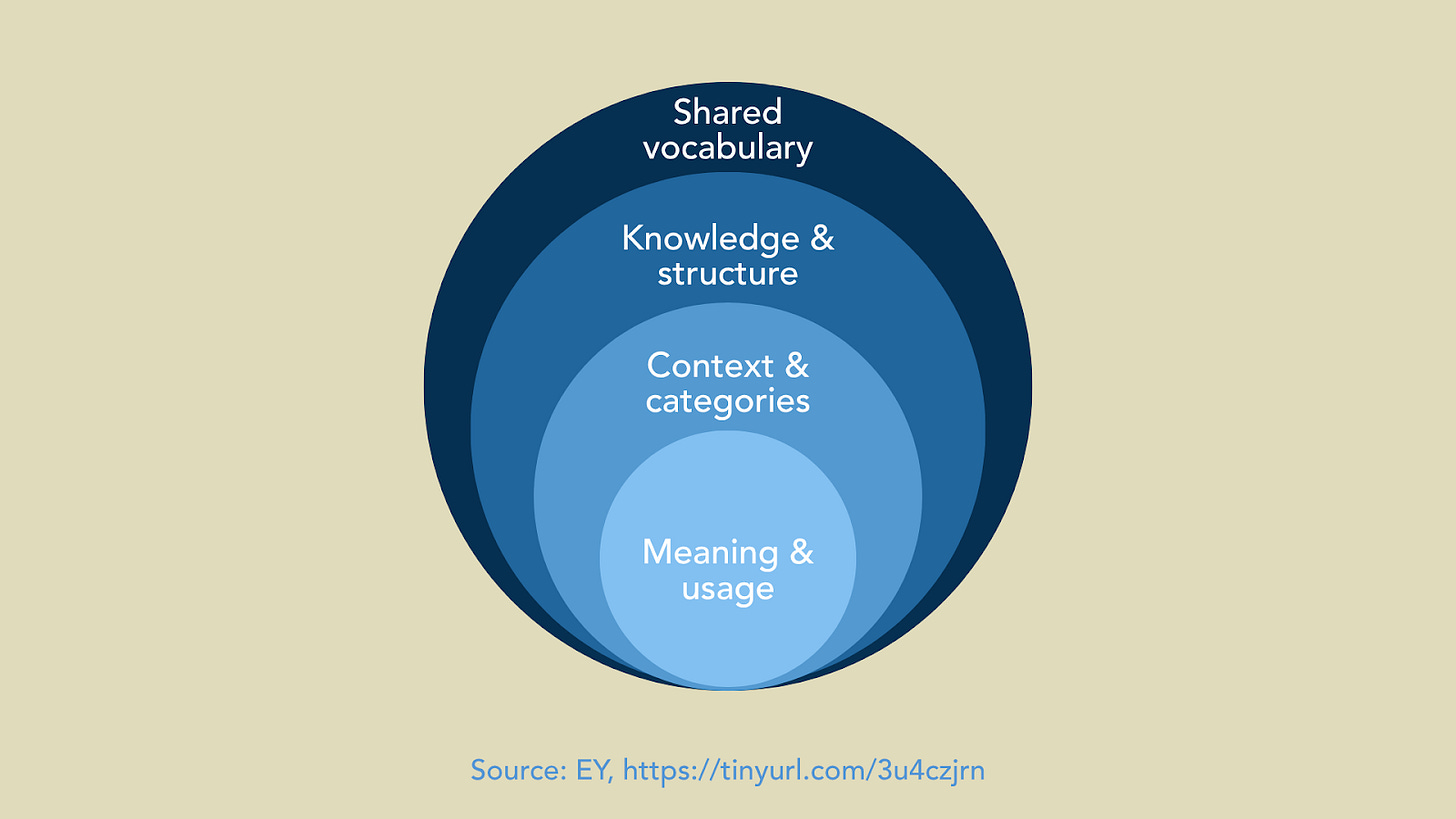

The Journey: From Controlled Vocabulary to Thesaurus

Let's model the AI domain step by step, starting with our candidate concepts and progressing through increasingly sophisticated structures. I'll show you how each stage builds upon the last, and how every SKOS element contributes to creating a machine-readable knowledge foundation.

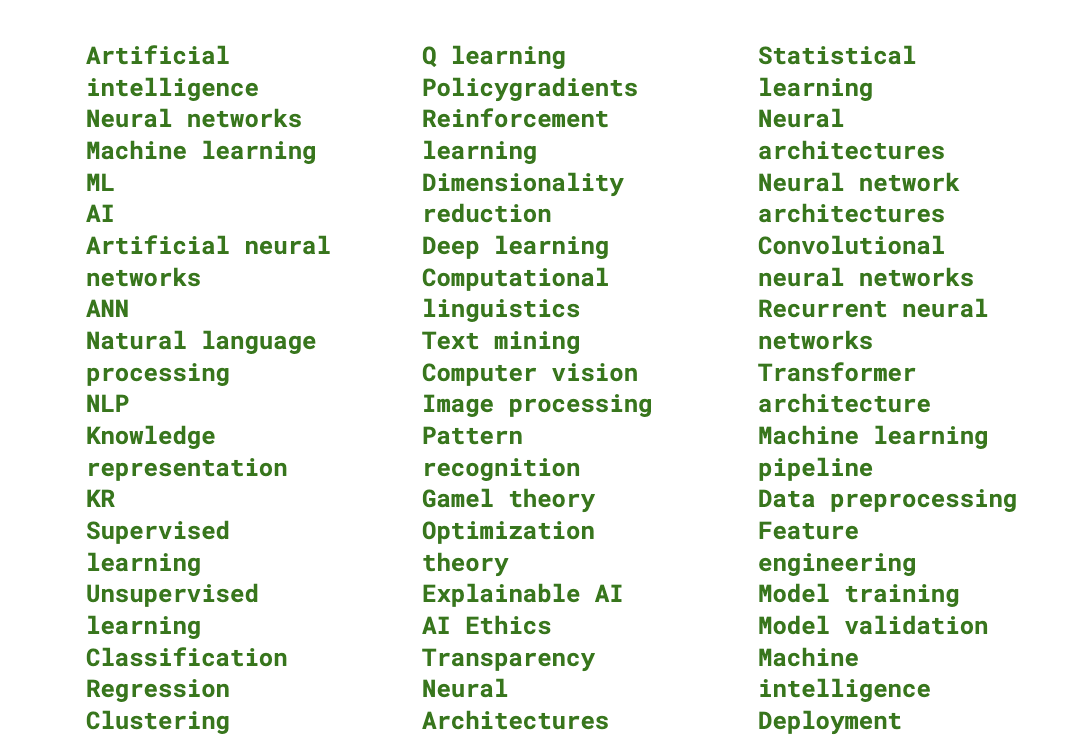

Our selected terms include:

These terms were derived from open taxonomies that describe the artificial intelligence domain from diverse perspectives. When I build information and knowledge structures, I always research thoroughly so that I arrive at a balanced collection of terms spanning a broad array of vocabularies.

To collect terms from these domain taxonomies, I conduct category analysis: I use clustering to group concepts by theme and similarity, as best I can. I also consider the source domain of each vocabulary, so that I understand the use cases and lenses behind it and can note biases or use-case-specific terms. Finally, I categorize terms as belonging to open-world vocabularies (for example, the internet and external customers) or closed-world vocabularies (domain- or organization-centric). Careful term collection and analysis are key to building robust, balanced knowledge representations, especially since AI models have been trained on the wild, open internet.

The source taxonomies and vocabularies are linked at the end of this article.

A Word About URIs

Before we dive into modeling, let's talk about what SKOS, and ontologies in general, bring to the table: every concept gets a unique URI, ideally a dereferenceable one. This isn't just technical pedantry; it's the foundation that enables global knowledge interoperability. When I assign http://example.org/ai-ontology#Machinelearning as the URI for our Machine learning concept, I'm not just creating a unique identifier. I'm creating a globally addressable resource that can be referenced, linked to, and reasoned about by any system anywhere.

This URI-based approach is what transforms a simple controlled vocabulary into a node in the global knowledge graph. URIs solve the fundamental problem of entity ambiguity that plagues AI systems. When your RAG system encounters "ML" in a document, how does it know whether the author means Machine learning, Maximum likelihood, or Medieval Latin? URIs provide unambiguous identity: the concept ai:Machinelearning is definitively distinct from stats:Maximumlikelihood or lang:MedievalLatin, even though all three share the same abbreviation.
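To make the identity point concrete, here is a minimal Python sketch (standard library only) of how prefixed names such as ai:Machinelearning expand into full URIs. Only the ai namespace comes from the Turtle used in this article; the stats and lang namespace URIs and the LABELS table are hypothetical stand-ins. Three concepts share the label "ML", yet each expands to a different URI.

```python
# Prefix map: the "ai" namespace matches the article's Turtle; the "stats" and
# "lang" namespaces are hypothetical, invented only for this illustration.
PREFIXES = {
    "ai": "http://example.org/ai-ontology#",
    "stats": "http://example.org/stats#",
    "lang": "http://example.org/languages#",
}

# Three concepts that share one surface label (illustrative data).
LABELS = {
    "ai:Machinelearning": "ML",
    "stats:Maximumlikelihood": "ML",
    "lang:MedievalLatin": "ML",
}


def expand(curie: str) -> str:
    """Expand a prefixed name such as 'ai:Machinelearning' into a full URI."""
    prefix, sep, local = curie.partition(":")
    if not sep or prefix not in PREFIXES:
        raise ValueError(f"cannot expand {curie!r}")
    return PREFIXES[prefix] + local


def candidates(label: str) -> list[str]:
    """Return every full URI carrying this label; the label alone cannot pick one."""
    return sorted(expand(c) for c, lbl in LABELS.items() if lbl == label)


for uri in candidates("ML"):
    print(uri)
```

A real pipeline would read prefixes from the Turtle @prefix declarations rather than hard-coding them. The point here is only that a label lookup returns several candidates, while each URI names exactly one concept.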

This baseline is critical to understanding how ontologies and ontological logic work. A single URI captures every facet of a concept, and that concept may one day grow into part of a more complex ontology. This style of concept representation is a dynamic way to collapse verbose vocabularies (many labels, one identifier) while also handling entity reconciliation. Simply put, we are working towards a context-rich ecosystem that speaks the language of a language model.
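Reconciliation by URI can be sketched the same way. In this hypothetical example, two sources describe the same concept; their records are invented for illustration, and properties are plain prefixed-name strings rather than real RDF terms. Because both sources use the same URI, merging them yields a single node, and the duplicated "ML" label collapses. This mirrors the way an RDF graph, being a set of triples, stores a repeated statement only once.

```python
ML_URI = "http://example.org/ai-ontology#Machinelearning"

# Hypothetical records from two sources as (subject URI, property, value).
source_a = [
    (ML_URI, "skos:prefLabel", "Machine learning"),
    (ML_URI, "skos:altLabel", "ML"),
]
source_b = [
    (ML_URI, "skos:altLabel", "ML"),  # same facet, stated again by a second source
    (ML_URI, "skos:definition", "A subset of AI that enables systems to learn from data"),
]


def reconcile(*sources):
    """Merge records keyed by URI; each property keeps a set of distinct values."""
    merged = {}
    for records in sources:
        for subject, prop, value in records:
            merged.setdefault(subject, {}).setdefault(prop, set()).add(value)
    return merged


nodes = reconcile(source_a, source_b)
print(len(nodes))                      # 1
print(sorted(nodes[ML_URI]))           # ['skos:altLabel', 'skos:definition', 'skos:prefLabel']
print(nodes[ML_URI]["skos:altLabel"])  # {'ML'}
```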

Now let’s get on with modeling.

Stage 1: Controlled Vocabulary - The Foundation

Our controlled vocabulary establishes the basic concepts for the AI domain. Here we define our core terms and create our first SKOS Concept Scheme:

```turtle
@prefix skos: <http://www.w3.org/2004/02/skos/core#> .
@prefix skosxl: <http://www.w3.org/2008/05/skos-xl#> .
@prefix ai: <http://example.org/ai-ontology#> .
@prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#> .
@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .
# SKOS has no creator/created properties; Dublin Core terms supply them.
@prefix dcterms: <http://purl.org/dc/terms/> .
@prefix xsd: <http://www.w3.org/2001/XMLSchema#> .

# Our Concept Scheme
ai:AIConceptScheme rdf:type skos:ConceptScheme ;
    skos:prefLabel "Artificial Intelligence Concept Scheme"@en ;
    skos:definition "A controlled vocabulary for artificial intelligence concepts and terminology"@en ;
    dcterms:creator "AI Knowledge Systems" ;
    dcterms:created "2025-01-01"^^xsd:date .

# Core AI Concepts
ai:Machinelearning rdf:type skos:Concept ;
    skos:inScheme ai:AIConceptScheme ;
    skos:prefLabel "Machine learning"@en ;
    skos:altLabel "ML"@en ;
    skos:definition "A subset of AI that enables systems to learn from data"@en ;
    skos:scopeNote "Includes supervised, unsupervised, and reinforcement learning"@en .

ai:Neuralnetworks rdf:type skos:Concept ;
    skos:inScheme ai:AIConceptScheme ;
    skos:prefLabel "Neural networks"@en ;
    skos:altLabel "Artificial neural networks"@en ;
    skos:altLabel "ANN"@en ;
    skos:definition "Computing systems inspired by biological neural networks"@en .

ai:Naturallanguageprocessing rdf:type skos:Concept ;
    skos:inScheme ai:AIConceptScheme ;
    skos:prefLabel "Natural language processing"@en ;
    skos:altLabel "NLP"@en ;
    skos:definition "AI field focused on interaction between computers and human language"@en .
```

Here we see SKOS in its simplest form. Each skos:Concept belongs to our skos:ConceptScheme through skos:inScheme and has a preferred label (skos:prefLabel), alternative labels (skos:altLabel) for synonyms and acronyms, and a clear definition (skos:definition). Notice how we're already handling the ambiguity problem I mentioned in Part II: "ML" could mean Machine learning or Maximum likelihood, but our SKOS model makes the intended meaning explicit through its definition.
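Here is one way a downstream system might use these labels: a minimal Python sketch, assuming the Stage 1 labels have been transcribed by hand into a dictionary (a real system would parse the Turtle with an RDF library). It maps every preferred and alternative label to its concept URI, so a mention like "ANN" normalizes to the preferred label "Neural networks". Case-insensitive matching via str.casefold is a design assumption, not something SKOS prescribes.

```python
AI = "http://example.org/ai-ontology#"

# Stage 1 labels transcribed by hand from the Turtle above:
# concept URI -> (skos:prefLabel, [skos:altLabel, ...]).
CONCEPTS = {
    AI + "Machinelearning": ("Machine learning", ["ML"]),
    AI + "Neuralnetworks": ("Neural networks", ["Artificial neural networks", "ANN"]),
    AI + "Naturallanguageprocessing": ("Natural language processing", ["NLP"]),
}


def build_label_index(concepts: dict) -> dict:
    """Map each case-folded preferred or alternative label to its concept URI."""
    index = {}
    for uri, (pref, alts) in concepts.items():
        for label in [pref, *alts]:
            key = label.casefold()
            if index.get(key, uri) != uri:
                # Within one vocabulary, a label should identify exactly one concept.
                raise ValueError(f"label {label!r} is ambiguous in this scheme")
            index[key] = uri
    return index


def normalize(mention: str, index: dict, concepts: dict):
    """Return the preferred label for a mention, or None if it is not in the scheme."""
    uri = index.get(mention.casefold())
    return concepts[uri][0] if uri is not None else None


index = build_label_index(CONCEPTS)
print(normalize("ANN", index, CONCEPTS))  # Neural networks
print(normalize("nlp", index, CONCEPTS))  # Natural language processing
```

The ValueError guard reflects the goal of this stage: within a single controlled vocabulary, each label should point to exactly one concept.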

Stage 2: Taxonomy - Building Hierarchy

Now we introduce hierarchical relationships using skos:broader and skos:narrower to create our three-level AI taxonomy. Each code block below represents one level of the hierarchy.
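SKOS declares skos:narrower as the inverse of skos:broader, so stating one direction is enough for any tool that applies that inference. The minimal Python sketch below assumes a few triples transcribed by hand from the Level 2 concepts in this stage, written as prefixed-name strings. In that data, ai:Machinelearning never lists its narrower concepts; they appear only once the inverse statements are added.

```python
# A few triples transcribed by hand from the Level 2 concepts in this stage,
# as (subject, predicate, object) prefixed names. Only the children assert
# skos:broader; ai:Machinelearning itself never lists its narrower concepts.
TRIPLES = {
    ("ai:Supervisedlearning", "skos:broader", "ai:Machinelearning"),
    ("ai:Supervisedlearning", "skos:narrower", "ai:Classification"),
    ("ai:Supervisedlearning", "skos:narrower", "ai:Regression"),
    ("ai:Unsupervisedlearning", "skos:broader", "ai:Machinelearning"),
    ("ai:Unsupervisedlearning", "skos:narrower", "ai:Clustering"),
    ("ai:Reinforcementlearning", "skos:broader", "ai:Machinelearning"),
}

# SKOS declares skos:narrower as the inverse of skos:broader.
INVERSE = {"skos:broader": "skos:narrower", "skos:narrower": "skos:broader"}


def close_inverses(triples: set) -> set:
    """Return the triples plus the inverse of every broader/narrower statement."""
    closed = set(triples)
    for s, p, o in triples:
        if p in INVERSE:
            closed.add((o, INVERSE[p], s))
    return closed


def narrower_of(concept: str, graph: set) -> list:
    """List the concepts directly narrower than `concept`."""
    return sorted(o for s, p, o in graph if s == concept and p == "skos:narrower")


graph = close_inverses(TRIPLES)
print(narrower_of("ai:Machinelearning", TRIPLES))  # [] before inference
print(narrower_of("ai:Machinelearning", graph))
```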

Level 1: Top-level AI domains

```turtle
ai:Artificialintelligence rdf:type skos:Concept ;
    skos:inScheme ai:AIConceptScheme ;
    skos:topConceptOf ai:AIConceptScheme ;
    skos:prefLabel "Artificial intelligence"@en ;
    skos:altLabel "AI"@en ;
    skos:definition "Computer systems able to perform tasks that typically require human intelligence"@en .

ai:Machinelearning rdf:type skos:Concept ;
    skos:inScheme ai:AIConceptScheme ;
    skos:topConceptOf ai:AIConceptScheme ;
    skos:prefLabel "Machine learning"@en ;
    skos:altLabel "ML"@en ;
    skos:definition "Systems that learn from data without being explicitly programmed"@en .

ai:Knowledgerepresentation rdf:type skos:Concept ;
    skos:inScheme ai:AIConceptScheme ;
    skos:topConceptOf ai:AIConceptScheme ;
    skos:prefLabel "Knowledge representation"@en ;
    skos:altLabel "KR"@en ;
    skos:definition "Methods for encoding knowledge for AI systems"@en .
```

Level 2: Major subcategories

```turtle
ai:Supervisedlearning rdf:type skos:Concept ;
    skos:inScheme ai:AIConceptScheme ;
    skos:prefLabel "Supervised learning"@en ;
    skos:definition "Learning with labeled training data"@en ;
    skos:broader ai:Machinelearning ;
    skos:narrower ai:Classification, ai:Regression .

ai:Unsupervisedlearning rdf:type skos:Concept ;
    skos:inScheme ai:AIConceptScheme ;
    skos:prefLabel "Unsupervised learning"@en ;
    skos:definition "Learning patterns in data without labeled examples"@en ;
    skos:broader ai:Machinelearning ;
    skos:narrower ai:Clustering, ai:Dimensionalityreduction .

ai:Reinforcementlearning rdf:type skos:Concept ;
    skos:inScheme ai:AIConceptScheme ;
    skos:prefLabel "Reinforcement learning"@en ;
    skos:altLabel "RL"@en ;
    skos:definition "Learning through interaction with an environment via rewards and penalties"@en ;
    skos:broader ai:Machinelearning ;
    skos:narrower ai:Qlearning, ai:Policygradients .
```

Level 3: Specific techniques and applications

```turtle
ai:Classification rdf:type skos:Concept ;
    skos:inScheme ai:AIConceptScheme ;
    skos:prefLabel "Classification"@en ;
    skos:definition "Predicting discrete categories or classes"@en ;
    skos:broader ai:Supervisedlearning ;
    skos:example "Email spam detection, image recognition"@en .

ai:Regression rdf:type skos:Concept ;
    skos:inScheme ai:AIConceptScheme ;
    skos:prefLabel "Regression"@en ;
    skos:definition "Predicting continuous numerical values"@en ;
    skos:broader ai:Supervisedlearning ;
    skos:example "Stock price prediction, temperature forecasting"@en .

ai:Clustering rdf:type skos:Concept ;
    skos:inScheme ai:AIConceptScheme ;
    skos:prefLabel "Clustering"@en ;
    skos:definition "Grouping similar data points without labeled examples"@en ;
    skos:broader ai:Unsupervisedlearning ;
    skos:example "Customer segmentation, gene sequencing"@en .
```

Notice how we use skos:topConceptOf to mark our highest-level concepts, and the skos:broader/skos:narrower relationships to create our taxonomic structure. The skos:example property provides concrete use cases, which is gold for RAG systems that need to understand when to apply specific concepts.
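With the hierarchy in place, a retrieval system can walk skos:broader links upward to give a concept its context, the same idea SKOS formalizes with skos:broaderTransitive. This minimal Python sketch assumes the Stage 2 data has been copied by hand into dictionaries and that each concept has at most one parent (SKOS itself allows several). The context_snippet format, a breadcrumb followed by the skos:example text, is a hypothetical choice for a RAG prompt, not a standard.

```python
# Stage 2 data transcribed by hand. Simplifying assumption: each concept has
# at most one skos:broader parent (SKOS itself permits several).
BROADER = {
    "ai:Supervisedlearning": "ai:Machinelearning",
    "ai:Unsupervisedlearning": "ai:Machinelearning",
    "ai:Reinforcementlearning": "ai:Machinelearning",
    "ai:Classification": "ai:Supervisedlearning",
    "ai:Regression": "ai:Supervisedlearning",
    "ai:Clustering": "ai:Unsupervisedlearning",
}
TOP_CONCEPTS = {"ai:Artificialintelligence", "ai:Machinelearning", "ai:Knowledgerepresentation"}
PREF_LABELS = {
    "ai:Machinelearning": "Machine learning",
    "ai:Supervisedlearning": "Supervised learning",
    "ai:Unsupervisedlearning": "Unsupervised learning",
    "ai:Reinforcementlearning": "Reinforcement learning",
    "ai:Classification": "Classification",
    "ai:Regression": "Regression",
    "ai:Clustering": "Clustering",
}
EXAMPLES = {
    "ai:Classification": "Email spam detection, image recognition",
    "ai:Regression": "Stock price prediction, temperature forecasting",
    "ai:Clustering": "Customer segmentation, gene sequencing",
}


def ancestors(concept: str) -> list:
    """Follow skos:broader links upward, nearest parent first."""
    path, seen = [], {concept}
    while concept in BROADER:
        concept = BROADER[concept]
        if concept in seen:
            raise ValueError(f"cycle detected at {concept}")
        seen.add(concept)
        path.append(concept)
    return path


def top_concept(concept: str) -> str:
    """Return the root of the concept's branch (the concept itself if it has no parent)."""
    chain = ancestors(concept)
    return chain[-1] if chain else concept


def context_snippet(concept: str) -> str:
    """Build a one-line 'breadcrumb plus examples' string for a RAG prompt."""
    lineage = reversed([concept, *ancestors(concept)])
    breadcrumb = " > ".join(PREF_LABELS[c] for c in lineage)
    example = EXAMPLES.get(concept)
    return f"{breadcrumb}. Examples: {example}" if example else breadcrumb


print(context_snippet("ai:Classification"))
# Machine learning > Supervised learning > Classification. Examples: Email spam detection, image recognition
```

In this data, every branch ends at a concept marked with skos:topConceptOf, so top_concept doubles as a quick sanity check that no concept is orphaned from the scheme's top level.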


Subscribe to Intentional Arrangement to keep reading this post and get 7 days of free access to the full post archives.