This article is the final installment of a three-part series. Part I can be found here and Part II can be found here.

Introduction

We've covered the why and the what—now it's time for the how. In this final installment, we're rolling up our sleeves to model a complete SKOS ontology for the domain of Artificial Intelligence.

We'll journey from a controlled vocabulary through a taxonomy to a rich thesaurus, in that order, using nearly every SKOS class, property, and relation along the way. We will explore how URIs (Uniform Resource Identifiers) transform our knowledge model from a simple vocabulary into a globally interoperable knowledge graph that supports entity reconciliation, category discovery, and seamless integration with broader semantic systems. Consider this your practical workshop for building a “knowledge graph lite” that can power RAG systems, improve training data, and bring semantic clarity to AI applications.

The Journey: From Controlled Vocabulary to Thesaurus

Let's model the AI domain step by step, starting with our candidate concepts and progressing through increasingly sophisticated structures. I'll show you how each stage builds upon the last, and how every SKOS element contributes to creating a machine-readable knowledge foundation.

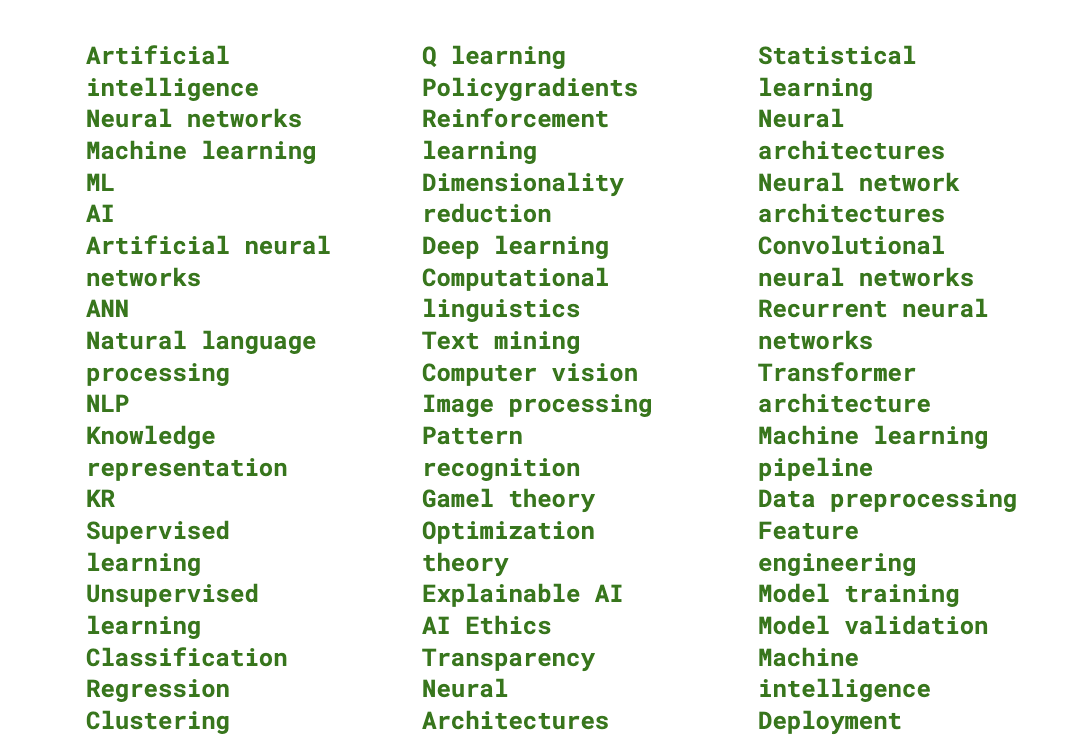

Our selected terms include:

These terms were derived from the open taxonomies that describe the artificial intelligence domain from varying, diverse perspectives. When I build information and knowledge structures, I always conduct thorough research, so as to arrive at a balanced collection of terms that introduce a broad array of vocabularies.

To collect terms from these domain taxonomies, I conduct category analysis, a process in which I use clustering to group concepts by theme and similarity as best as possible. I consider the source domain of each vocabulary, along with the use cases and lenses applied to it, so that I can note biases or use-case-specific terms. I also define terms according to open-world (say, the internet and external customers) and closed-world (domain- or organization-centric) vocabularies. This collection and analysis of terms is key to building robust and balanced knowledge representations, especially when we consider that AI has been trained on the wild, open internet.

The source taxonomies and vocabularies are linked at the end of this article.

A Word About URIs

Before we dive into modeling, let's talk about what SKOS, and ontologies in general, bring to the table: every concept gets a unique, dereferenceable URI. This isn't just technical pedantry; it's the foundation that enables global knowledge interoperability. When I assign http://example.org/ai-ontology#Machinelearning as the URI for our Machine learning concept, I'm not just creating a unique identifier. I'm creating a globally addressable resource that can be referenced, linked to, and reasoned about by any system anywhere.

This URI-based approach is what transforms a simple controlled vocabulary into a node in the global knowledge graph. URIs solve the fundamental problem of entity ambiguity that plagues AI systems. When your RAG system encounters "ML" in a document, how does it know whether we mean Machine learning, Maximum likelihood, or Medieval Latin? URIs provide unambiguous identity. The concept ai:Machinelearning is definitively distinct from stats:Maximumlikelihood or lang:MedievalLatin, even if they share the same acronym.
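To make the "ML" example concrete, here is a minimal Python sketch of how one ambiguous acronym can point to three distinct URIs. Only the ai: namespace comes from this article; the stats: and lang: namespaces, and the resolve helper itself, are assumptions made up for illustration.

```python
# Namespaces: only "ai" appears in this article's Turtle.
PREFIXES = {
    "ai": "http://example.org/ai-ontology#",
    "stats": "http://example.org/statistics#",  # assumed for illustration
    "lang": "http://example.org/languages#",    # assumed for illustration
}

# One ambiguous surface form, three distinct concepts.
CANDIDATES = {
    "ML": ["ai:Machinelearning", "stats:Maximumlikelihood", "lang:MedievalLatin"],
}


def expand(curie: str) -> str:
    """Turn a prefixed name such as 'ai:Machinelearning' into a full URI."""
    prefix, local_name = curie.split(":", 1)
    return PREFIXES[prefix] + local_name


def resolve(label: str, vocabulary: str) -> str:
    """Pick the candidate concept that belongs to the vocabulary in use."""
    for curie in CANDIDATES.get(label, []):
        if curie.startswith(vocabulary + ":"):
            return expand(curie)
    raise KeyError(f"{label!r} has no concept in vocabulary {vocabulary!r}")


print(resolve("ML", "ai"))
```

The label stays ambiguous; it is the URI, chosen with knowledge of which vocabulary is in play, that carries the identity.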

This baseline understanding is critical to understanding how ontologies and ontological logic work. One URI is used to capture all facets of a single concept that may one day grow up to become part of a more complex ontology. This type of concept representation is a dynamic way to collapse verbose vocabularies, while also handling entity reconciliation. Simply put, we are working towards building a context-rich ecosystem that speaks the language of a language model.

Now let’s get on with modeling.

Stage 1: Controlled Vocabulary - The Foundation

Our controlled vocabulary establishes the basic concepts for the AI domain. Here we define our core terms and create our first SKOS Concept Scheme:

@prefix skos: <http://www.w3.org/2004/02/skos/core#> .

@prefix skosxl: <http://www.w3.org/2008/05/skos-xl#> .

@prefix ai: <http://example.org/ai-ontology#> .

@prefix rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#> .

@prefix rdfs: <http://www.w3.org/2000/01/rdf-schema#> .

@prefix dcterms: <http://purl.org/dc/terms/> .

@prefix xsd: <http://www.w3.org/2001/XMLSchema#> .

Our Concept Scheme

ai:AIConceptScheme rdf:type skos:ConceptScheme ;

skos:prefLabel "Artificial Intelligence Concept Scheme"@en ;

skos:definition "A controlled vocabulary for artificial intelligence concepts and terminology"@en ;

dcterms:creator "AI Knowledge Systems" ;

dcterms:created "2025-01-01"^^xsd:date .

Core AI Concepts

ai:Machinelearning rdf:type skos:Concept ;

skos:inScheme ai:AIConceptScheme ;

skos:prefLabel "Machine learning"@en ;

skos:altLabel "ML"@en ;

skos:definition "A subset of AI that enables systems to learn from data"@en ;

skos:scopeNote "Includes supervised, unsupervised, and reinforcement learning"@en .

ai:Neuralnetworks rdf:type skos:Concept ;

skos:inScheme ai:AIConceptScheme ;

skos:prefLabel "Neural networks"@en ;

skos:altLabel "Artificial neural networks"@en ;

skos:altLabel "ANN"@en ;

skos:definition "Computing systems inspired by biological neural networks"@en .

ai:Naturallanguageprocessing rdf:type skos:Concept ;

skos:inScheme ai:AIConceptScheme ;

skos:prefLabel "Natural language processing"@en ;

skos:altLabel "NLP"@en ;

skos:definition "AI field focused on interaction between computers and human language"@en .

Here we see SKOS in its simplest form. Each skos:Concept belongs to our skos:ConceptScheme, has a preferred label (skos:prefLabel), alternative labels (skos:altLabel) for synonyms and acronyms, and a clear definition. Notice how we're already handling the ambiguity problem I mentioned in Part II: "ML" could mean Machine Learning or Maximum Likelihood, but our SKOS model makes the context explicit with a definition.
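One way to picture what these labels buy us is a label index: every preferred or alternative label leads back to the concept that owns it. The sketch below mirrors the three Stage 1 concepts in plain Python dictionaries. The build_label_index and lookup helpers are my own illustrative names, and a real system would read the Turtle with an RDF library rather than hard-code the data.

```python
from collections import defaultdict

# The three Stage 1 concepts, keyed by prefixed name.
CONCEPTS = {
    "ai:Machinelearning": {
        "prefLabel": "Machine learning",
        "altLabels": ["ML"],
    },
    "ai:Neuralnetworks": {
        "prefLabel": "Neural networks",
        "altLabels": ["Artificial neural networks", "ANN"],
    },
    "ai:Naturallanguageprocessing": {
        "prefLabel": "Natural language processing",
        "altLabels": ["NLP"],
    },
}


def build_label_index(concepts):
    """Map every lower-cased label to the set of concepts that carry it."""
    index = defaultdict(set)
    for concept_id, labels in concepts.items():
        for label in [labels["prefLabel"], *labels["altLabels"]]:
            index[label.lower()].add(concept_id)
    return index


LABEL_INDEX = build_label_index(CONCEPTS)


def lookup(text):
    """Return the concepts whose preferred or alternative label matches text."""
    return sorted(LABEL_INDEX.get(text.strip().lower(), set()))


print(lookup("ANN"))
```

Because the index maps a label to a set, a label shared by two concepts would surface both candidates instead of silently picking one.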

Stage 2: Taxonomy - Building Hierarchy

Now we introduce hierarchical relationships using skos:broader and skos:narrower to create our three-level AI taxonomy. Each code block makes one level of the hierarchy explicit.

Level 1: Top-level AI domains

ai:Artificialintelligence rdf:type skos:Concept ;

skos:inScheme ai:AIConceptScheme ;

skos:topConceptOf ai:AIConceptScheme ;

skos:prefLabel "Artificial intelligence"@en ;

skos:altLabel "AI"@en ;

skos:definition "Computer systems able to perform tasks that typically require human intelligence"@en .

ai:Machinelearning rdf:type skos:Concept ;

skos:inScheme ai:AIConceptScheme ;

skos:topConceptOf ai:AIConceptScheme ;

skos:prefLabel "Machine learning"@en ;

skos:altLabel "ML"@en ;

skos:definition "Systems that learn from data without being explicitly programmed"@en .

ai:Knowledgerepresentation rdf:type skos:Concept ;

skos:inScheme ai:AIConceptScheme ;

skos:topConceptOf ai:AIConceptScheme ;

skos:prefLabel "Knowledge representation"@en ;

skos:altLabel "KR"@en ;

skos:definition "Methods for encoding knowledge for AI systems"@en .

Level 2: Major subcategories

ai:Supervisedlearning rdf:type skos:Concept ;

skos:inScheme ai:AIConceptScheme ;

skos:prefLabel "Supervised learning"@en ;

skos:definition "Learning with labeled training data"@en ;

skos:broader ai:Machinelearning ;

skos:narrower ai:Classification, ai:Regression .

ai:Unsupervisedlearning rdf:type skos:Concept ;

skos:inScheme ai:AIConceptScheme ;

skos:prefLabel "Unsupervised learning"@en ;

skos:definition "Learning patterns in data without labeled examples"@en ;

skos:broader ai:Machinelearning ;

skos:narrower ai:Clustering, ai:Dimensionalityreduction .

ai:Reinforcementlearning rdf:type skos:Concept ;

skos:inScheme ai:AIConceptScheme ;

skos:prefLabel "Reinforcement learning"@en ;

skos:altLabel "RL"@en ;

skos:definition "Learning through interaction with environment via rewards and penalties"@en ;

skos:broader ai:Machinelearning ;

skos:narrower ai:Qlearning, ai:Policygradients .

Level 3: Specific techniques and applications

ai:Classification rdf:type skos:Concept ;

skos:inScheme ai:AIConceptScheme ;

skos:prefLabel "Classification"@en ;

skos:definition "Predicting discrete categories or classes"@en ;

skos:broader ai:Supervisedlearning ;

skos:example "Email spam detection, image recognition"@en .

ai:Regression rdf:type skos:Concept ;

skos:inScheme ai:AIConceptScheme ;

skos:prefLabel "Regression"@en ;

skos:definition "Predicting continuous numerical values"@en ;

skos:broader ai:Supervisedlearning ;

skos:example "Stock price prediction, temperature forecasting"@en .

ai:Clustering rdf:type skos:Concept ;

skos:inScheme ai:AIConceptScheme ;

skos:prefLabel "Clustering"@en ;

skos:definition "Grouping similar data points without labeled examples"@en ;

skos:broader ai:Unsupervisedlearning ;

skos:example "Customer segmentation, gene sequencing"@en .

Notice how we use skos:topConceptOf to mark our highest level concepts, and the skos:broader/skos:narrower relationships to create our taxonomic structure. The skos:example property provides concrete use cases: this is gold for RAG systems that need to understand when to apply specific concepts.
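Since skos:narrower is the inverse of skos:broader, one direction can be derived from the other, and comparing the two is a cheap sanity check on a hand-built taxonomy. The following sketch copies the hierarchy statements from the Level 2 and Level 3 blocks into Python dictionaries. The helper names are hypothetical, and the child-to-parent dictionary assumes a single parent per concept, which SKOS itself does not require.

```python
from collections import defaultdict

# skos:broader statements from the Level 2 and Level 3 blocks (child -> parent).
# Assumption: one parent per concept; SKOS allows several.
BROADER = {
    "ai:Supervisedlearning": "ai:Machinelearning",
    "ai:Unsupervisedlearning": "ai:Machinelearning",
    "ai:Reinforcementlearning": "ai:Machinelearning",
    "ai:Classification": "ai:Supervisedlearning",
    "ai:Regression": "ai:Supervisedlearning",
    "ai:Clustering": "ai:Unsupervisedlearning",
}

# skos:narrower statements as declared on the Level 2 concepts.
DECLARED_NARROWER = {
    "ai:Supervisedlearning": {"ai:Classification", "ai:Regression"},
    "ai:Unsupervisedlearning": {"ai:Clustering", "ai:Dimensionalityreduction"},
    "ai:Reinforcementlearning": {"ai:Qlearning", "ai:Policygradients"},
}


def invert_broader(broader):
    """Derive parent -> children from child -> parent."""
    narrower = defaultdict(set)
    for child, parent in broader.items():
        narrower[parent].add(child)
    return dict(narrower)


def undescribed_children(declared, broader):
    """List (parent, child) pairs whose child has no skos:broader of its own."""
    return sorted(
        (parent, child)
        for parent, children in declared.items()
        for child in children
        if broader.get(child) != parent
    )


for parent, child in undescribed_children(DECLARED_NARROWER, BROADER):
    print(f"{child} is named under {parent} but not yet described")
```

Run against the blocks above, the check points at the concepts that are referenced by skos:narrower but do not yet have a description of their own, a useful to-do list while the vocabulary is still growing.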

Stage 3: Thesaurus - Adding Semantic Richness

Now we transform our taxonomy into a full thesaurus by introducing associative relationships with skos:related and exploring SKOS-XL for complex label management:

Adding related (associative) relationships

ai:Neuralnetworks skos:related ai:Deeplearning, ai:Machinelearning .

ai:Naturallanguageprocessing skos:related ai:Computationallinguistics, ai:Textmining .

ai:Computervision skos:related ai:Imageprocessing, ai:Patternrecognition .

Cross-domain relationships

ai:Reinforcementlearning skos:related ai:Gametheory, ai:Optimizationtheory .

ai:ExplainableAI skos:related ai:AIethics, ai:Transparency .

SKOS-XL for complex label management

ai:Machinelearninglabel rdf:type skosxl:Label ;

skosxl:literalForm "Machine learning"@en ;

skosxl:labelRelation ai:Statisticallearninglabel .

ai:Statisticallearninglabel rdf:type skosxl:Label ;

skosxl:literalForm "Statistical learning"@en .

ai:Machinelearning skosxl:prefLabel ai:Machinelearninglabel ;

skosxl:altLabel ai:Statisticallearninglabel .

We can also leverage skos:Collection, a resource used to group skos:Concepts (or even other collections) in a non-hierarchical manner. It's intended for display-oriented or associative groupings rather than semantic modeling.

There are two main types:

skos:Collection – A basic, unordered grouping.

skos:OrderedCollection – A collection where the order of members is meaningful (uses an rdf:List structure).

Collection for organizing related concepts

ai:Neuralarchitectures rdf:type skos:Collection ;

skos:prefLabel "Neural network architectures"@en ;

skos:member ai:Convolutionalneuralnetworks, ai:Recurrentneuralnetworks, ai:Transformerarchitecture .

⚠️ What Collections Are Not For

Not a substitute for skos:broader/skos:narrower

Does not imply inferencing or transitivity

Not semantic hierarchy: they are for navigation and display only

SKOS Collections help organize and present concepts clearly, without distorting their semantic relationships. They are essential tools for information architects and vocabulary curators working in digital libraries, repositories, ontologies, and knowledge graphs. Collections are also useful for future-proofing: grouping concepts now prepares the ground for the day when more complex ontological modeling comes into scope. For example, I can group concepts into themes according to relationship types or certain descriptive attribute assignments.

Ordered Collection for process-oriented concepts

ai:MLpipeline rdf:type skos:OrderedCollection ;

skos:prefLabel "Machine learning pipeline"@en ;

skos:memberList (ai:Datapreprocessing ai:Featureengineering ai:Modeltraining ai:Modelvalidation ai:Deployment) .

The skos:related relationships break us out of strict hierarchical thinking. Machine learning and Neural networks aren't in a parent-child relationship, but they're definitely associated. These relationships are crucial for AI applications: when a user asks about neural networks, the system knows to consider machine learning as an associated concept, which adds rich context.
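Two properties of skos:related are worth seeing in action: it is symmetric, so a link asserted in one direction holds in the other, and it is not transitive, so a neighbor's neighbor is not automatically related. Here is a small Python sketch using some of the skos:related statements from this stage. The related_index and related_to helpers are illustrative names of my own.

```python
from collections import defaultdict

# skos:related statements as asserted in the Turtle (one direction only).
RELATED_ASSERTED = [
    ("ai:Neuralnetworks", "ai:Deeplearning"),
    ("ai:Neuralnetworks", "ai:Machinelearning"),
    ("ai:Naturallanguageprocessing", "ai:Computationallinguistics"),
    ("ai:Naturallanguageprocessing", "ai:Textmining"),
]


def related_index(pairs):
    """Store each link in both directions, because skos:related is symmetric."""
    index = defaultdict(set)
    for left, right in pairs:
        index[left].add(right)
        index[right].add(left)
    return index


RELATED = related_index(RELATED_ASSERTED)


def related_to(concept):
    """Direct neighbours only: skos:related is not transitive."""
    return sorted(RELATED.get(concept, set()))


print(related_to("ai:Machinelearning"))
```

Asking about Machine learning returns Neural networks even though the Turtle only states the link from the other side, while Deep learning stays out of the result because nothing relates it to Machine learning directly.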

SKOS-XL: When Labels Need Labels

SKOS-XL extends SKOS to handle complex labeling scenarios. Instead of simple string literals, SKOS-XL treats labels as first-class resources that can have their own properties and relationships:

Handling label extensions with SKOS-XL

ai:AILabel rdf:type skosxl:Label ;

skosxl:literalForm "Artificial intelligence"@en ;

skos:changeNote "Preferred term as of 2025, replacing 'Machine intelligence'"@en ;

skos:historyNote "Term coined by John McCarthy in 1956"@en .

ai:Artificialintelligence skosxl:prefLabel ai:AILabel .

Handling acronym relationships

ai:NLPLabel rdf:type skosxl:Label ;

skosxl:literalForm "NLP"@en .

ai:Naturallanguageprocessinglabel rdf:type skosxl:Label ;

skosxl:literalForm "Natural language processing"@en .

ai:NLPLabel skosxl:labelRelation ai:Naturallanguageprocessinglabel .

This is particularly powerful for AI systems dealing with evolving terminology. As AI advances rapidly, terms change meaning or fall out of favor. SKOS-XL lets us track these changes semantically.

Mapping Between Vocabularies: Mapping Properties

One of SKOS's most powerful features is its mapping properties, essential for interoperating between different AI vocabularies:

Mapping to external vocabularies

ai:Machinelearning skos:exactMatch <http://dbpedia.org/resource/Machine_learning> ;

skos:closeMatch <http://www.wikidata.org/entity/Q2539> ;

skos:broadMatch <https://schema.org/ComputerScience> .

ai:Deeplearning skos:relatedMatch <http://dbpedia.org/resource/Deep_learning> .

Notation for integration with classification systems

ai:Supervisedlearning skos:notation "AI.ML.SL" ;

skos:note "Internal classification code for supervised learning methods"@en .

These mappings are game-changers for RAG systems that need to pull from multiple knowledge sources. Your SKOS vocabulary becomes a semantic bridge between AI knowledge repositories, ontologies, knowledge graphs, and structured data repositories.

You will note this link in the code block: http://dbpedia.org/resource/Machine_learning.

This URI, or permalink, brings us to DBpedia. DBpedia is an ontology-rich knowledge base and knowledge graph that embeds ontology throughout its pages. Within DBpedia, you will discover a rich linked data ecosystem connecting resources and knowledge graphs such as Wikidata, Wikipedia, VIAF, and the Getty, to name a few. Without sliding down a semantic rabbit hole, note that the link uses http, NOT https. Machines compare URIs as exact strings, so the http form that DBpedia publishes is the identifier to use, and it lets machines disambiguate, define, and contextualize categories by leveraging the ontologies embedded within these pages. Visit the DBpedia link to see Machine learning as defined by this ontology-rich page.
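As a rough illustration of how the mapping properties might be consumed downstream, the sketch below treats them as different strengths of link. It assumes a policy of my own choosing, not anything SKOS mandates: only skos:exactMatch is considered strong enough to merge records from two sources, while the other mapping properties are kept as context. The statements are copied from the Turtle in this section, and the helper names are hypothetical.

```python
# Mapping statements from this section as (subject, property, target).
MAPPINGS = [
    ("ai:Machinelearning", "skos:exactMatch",
     "http://dbpedia.org/resource/Machine_learning"),
    ("ai:Machinelearning", "skos:broadMatch",
     "https://schema.org/ComputerScience"),
    ("ai:Deeplearning", "skos:relatedMatch",
     "http://dbpedia.org/resource/Deep_learning"),
]

# Assumed policy for this sketch: merge only on exactMatch.
MERGE_PROPERTIES = {"skos:exactMatch"}


def merge_candidates(concept):
    """External URIs treated as the same concept for record merging."""
    return [
        target
        for subject, prop, target in MAPPINGS
        if subject == concept and prop in MERGE_PROPERTIES
    ]


def context_only(concept):
    """External URIs that add context but should not be merged."""
    return [
        target
        for subject, prop, target in MAPPINGS
        if subject == concept and prop not in MERGE_PROPERTIES
    ]


print(merge_candidates("ai:Machinelearning"))
print(context_only("ai:Machinelearning"))
```

Keeping the two lists apart lets a retrieval pipeline enrich a concept with broader or related material without asserting that two sources describe exactly the same thing.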

Why This Matters for AI Systems

Training Data Enhancement

A well-structured SKOS thesaurus can dramatically improve training data quality. Instead of raw text about "ML models," your training data can be enriched with explicit relationships: supervised learning ↔ classification ↔ pattern recognition. This semantic context helps models understand not just what terms mean, but how they relate.

RAG System Optimization

RAG systems benefit enormously from SKOS structure. When a user asks about "neural networks," the system can leverage skos:related relationships to pull relevant context about deep learning, machine learning, and specific architectures. The skos:broader and skos:narrower relationships help the system understand when to zoom in or out on concepts.

RIG (Retrieval Interleaved Generation) Support

RIG systems, which use retrieval to influence generation at multiple points throughout the model query and prompt journey, can use SKOS hierarchies to guide search and response strategies. The semantic relationships help determine which retrieved passages are most relevant and how they should influence the AI model’s response generation process.

Implementation in Practice

Here's how this SKOS model supports real AI applications:

Vector Database Integration: Use SKOS preferred labels and alternative labels to improve embedding quality. The semantic relationships guide similarity calculations—concepts with skos:related relationships should have higher similarity scores than those without. A SKOS structure can also serve as a category backbone for a vector database, thereby inserting a human-in-the-loop into the decisions regarding categories and hierarchy or tree structures. In other words, a SKOS structure can help guide vector categorization decisions, normally a decision that resides in the realm of machines.
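A minimal sketch of the vector database idea, under stated assumptions: the text sent to an embedding model is assembled from a concept's labels and definition, and a similarity score is nudged upward when two concepts are linked by skos:related. No embedding model is called here. The cosine value is passed in, and the 0.1 boost is an arbitrary number chosen for illustration, as are the function names.

```python
def embedding_text(concept):
    """Assemble the string to embed from a concept's labels and definition."""
    parts = [concept["prefLabel"]]
    if concept.get("altLabels"):
        parts.append("Also known as: " + ", ".join(concept["altLabels"]))
    if concept.get("definition"):
        parts.append(concept["definition"])
    return ". ".join(parts)


def adjusted_similarity(cosine, left, right, related_pairs, boost=0.1):
    """Raise the score for pairs linked by skos:related, capped at 1.0."""
    if (left, right) in related_pairs or (right, left) in related_pairs:
        return min(1.0, cosine + boost)
    return cosine


machine_learning = {
    "prefLabel": "Machine learning",
    "altLabels": ["ML"],
    "definition": "A subset of AI that enables systems to learn from data",
}
related_pairs = {("ai:Neuralnetworks", "ai:Machinelearning")}

print(embedding_text(machine_learning))
print(adjusted_similarity(0.5, "ai:Machinelearning", "ai:Neuralnetworks", related_pairs))
```

Whether a fixed boost, a learned weight, or a re-ranking step works best is an empirical question for your own data; the point is only that the curated relationships give the machine something human-reviewed to lean on.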

Query Expansion: When users search for "AI ethics," expand to include related concepts like "explainable AI," "algorithmic bias," and "responsible AI" using the skos:related relationships. In addition, queries are supported by skos:altLabel and skos:hiddenLabel, unified by a single URI identifier that defines a single concept by not only the preferred label, but also by acronyms, synonyms and misspellings.
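Query expansion along these lines can be sketched in a few lines of Python. The related links between Explainable AI, AI ethics, and Transparency come from the thesaurus stage; the alternative and hidden labels below are assumptions I added so the example has something to match, and expand_query is a hypothetical helper.

```python
# Labels per concept. The alt and hidden labels are assumed for illustration.
CONCEPTS = {
    "ai:AIethics": {"pref": "AI ethics", "alt": ["Ethics of AI"], "hidden": ["AI ethic"]},
    "ai:ExplainableAI": {"pref": "Explainable AI", "alt": ["XAI"], "hidden": []},
    "ai:Transparency": {"pref": "Transparency", "alt": [], "hidden": []},
}

# skos:related statements from the thesaurus stage.
RELATED = {
    ("ai:ExplainableAI", "ai:AIethics"),
    ("ai:ExplainableAI", "ai:Transparency"),
}


def find_concept(text):
    """Match the query against preferred, alternative, and hidden labels."""
    needle = text.strip().lower()
    for concept_id, labels in CONCEPTS.items():
        candidates = [labels["pref"], *labels["alt"], *labels["hidden"]]
        if needle in (label.lower() for label in candidates):
            return concept_id
    return None


def neighbours(concept_id):
    """Concepts linked by skos:related in either direction."""
    linked = set()
    for left, right in RELATED:
        if left == concept_id:
            linked.add(right)
        elif right == concept_id:
            linked.add(left)
    return sorted(linked)


def expand_query(text):
    """Return search terms: the concept's own labels, then related labels."""
    concept_id = find_concept(text)
    if concept_id is None:
        return [text]
    labels = CONCEPTS[concept_id]
    # Hidden labels help match input but are not emitted as search terms.
    terms = [labels["pref"], *labels["alt"]]
    terms += [CONCEPTS[other]["pref"] for other in neighbours(concept_id)]
    return terms


print(expand_query("AI ethic"))
```

The misspelled query still lands on the right concept through the hidden label, and the expansion then proceeds from the URI rather than from the string the user typed.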

Concept Classification: Use the taxonomic structure to automatically classify new content. A document about "convolutional neural networks" can be automatically tagged with broader concepts like "deep learning" and "machine learning." Because SKOS supports simple yet powerful semantic relations and associations, inferencing should be leveraged when implementing automated concept classification logic. See Part I of this series for SKOS's lightweight inference support.
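The tagging behavior described above amounts to walking skos:broader links upward from whatever concept a document mentions. In the sketch below, the links for Classification and Supervised learning come from the taxonomy stage, while the two links that place Convolutional neural networks under Deep learning and Deep learning under Machine learning are assumptions added for this example, since our Turtle does not state them. Matching by substring is also a deliberate simplification.

```python
# child -> parent. The first two links are assumed for this example.
BROADER = {
    "ai:Convolutionalneuralnetworks": "ai:Deeplearning",  # assumed
    "ai:Deeplearning": "ai:Machinelearning",              # assumed
    "ai:Classification": "ai:Supervisedlearning",
    "ai:Supervisedlearning": "ai:Machinelearning",
}

# Lower-cased labels that trigger a tag (simplified substring matching).
LABELS = {
    "convolutional neural networks": "ai:Convolutionalneuralnetworks",
    "classification": "ai:Classification",
}


def ancestors(concept):
    """Follow skos:broader upward, nearest ancestor first."""
    chain = []
    seen = {concept}
    while concept in BROADER:
        concept = BROADER[concept]
        if concept in seen:  # guard against accidental cycles
            break
        seen.add(concept)
        chain.append(concept)
    return chain


def tag_document(text):
    """Tag a document with each matched concept and all of its ancestors."""
    lowered = text.lower()
    tags = []
    for label, concept in LABELS.items():
        if label in lowered:
            for tag in [concept, *ancestors(concept)]:
                if tag not in tags:
                    tags.append(tag)
    return tags


print(tag_document("An introduction to Convolutional Neural Networks"))
```

The upward walk is the hand-rolled equivalent of asking a reasoner for skos:broaderTransitive, which is the lightweight inference SKOS provides for exactly this purpose.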

Knowledge Ecosystems Realized: The URI

Remember how each concept in our SKOS model has a URI? Our URI-based SKOS model now participates in a global knowledge ecosystem where:

Interoperability is built-in: Every concept can be precisely referenced and linked across systems and organizations.

Entity Reconciliation becomes semantic: Instead of fuzzy string matching, we have precise URI-based concept alignment.

Category Discovery operates through semantic networks: Related concepts are discovered through URI-based relationship traversal rather than keyword similarity.

Knowledge Integration happens automatically: Cross-vocabulary mappings enable seamless integration with authoritative knowledge sources.

Semantic Search leverages hierarchical relationships: Queries can be automatically expanded using broader, narrower, and related concept URIs.

Multilingual Support is inherent: The same concept URI can have labels in multiple languages, enabling cross-lingual knowledge access.
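The multilingual point can be sketched as a lookup keyed by language tag. SKOS allows at most one preferred label per language for a concept, which a dictionary keyed by language happens to enforce. The French and German labels below are illustrative additions that do not appear in our Turtle, and pref_label is a hypothetical helper.

```python
# One concept URI, one preferred label per language tag.
# The "fr" and "de" labels are illustrative additions.
PREF_LABELS = {
    "ai:Machinelearning": {
        "en": "Machine learning",
        "fr": "Apprentissage automatique",
        "de": "Maschinelles Lernen",
    },
}


def pref_label(concept, lang, fallback="en"):
    """Return the preferred label in lang, falling back to another language."""
    labels = PREF_LABELS[concept]
    return labels.get(lang, labels[fallback])


print(pref_label("ai:Machinelearning", "fr"))
print(pref_label("ai:Machinelearning", "ja"))
```

The identity of the concept never changes across languages; only the label shown to the user does.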

URIs and AI Systems

This URI-based approach transforms how AI systems interact with knowledge:

RAG Systems: Instead of retrieving documents based on keyword overlap, they retrieve based on semantic concept matching using URI relationships.

Training Data: Models learn not just from text, but from explicit semantic relationships between concepts, improving understanding and reducing hallucinations. When many labels share a single URI, your SKOS thesaurus model is perfectly positioned to serve as a primitive type of NLP.

Knowledge Integration: Different organizational AI systems can seamlessly share and build upon each other's knowledge models through URI-based concept alignment. Supported by mapping relations, of course.

Entity Recognition: Named entity recognition (NER) systems can achieve much higher precision by linking entities to authoritative URI-based concepts rather than ambiguous text strings.

Question Answering: Systems can provide more accurate answers by understanding the precise semantic relationships between query concepts and knowledge base concepts. A step up from question-answer banks mapped to SQL queries.

The Full SKOS Toolkit in Action

Our AI domain model now utilizes virtually every SKOS element:

Concept Scheme: Our overall AI vocabulary container

Concepts: Individual AI terms and ideas

Labels: Preferred, alternative, and hidden labels for each concept

Hierarchical Relations: Broader/narrower relationships creating taxonomic structure

Associative Relations: Related connections that cross taxonomic boundaries

Mapping Relations: Connections to external vocabularies

Collections: Groupings of related concepts

Ordered Collections: Sequential concept arrangements

Documentation: Definitions, scope notes, examples, and change histories

https://www.w3.org/TR/skos-reference/

This isn't just academic modeling—it's a practical knowledge foundation that makes AI systems smarter, more reliable, and more transparent.

Looking Forward: Beyond SKOS

While SKOS provides an excellent foundation, remember that it's designed to be a stepping stone. As your AI domain knowledge matures, you might need to graduate to OWL for more complex logical relationships, or to integrate and support other ontological frameworks. But here's the good news: your SKOS foundation helps ensure that the transition will be smooth and your knowledge investment protected.

The knowledge graph you've built with SKOS isn't just "lite"—it's the essential scaffolding upon which robust AI applications stand. AI systems are only as good as their context, and SKOS gives you the tools to make that context explicit, machine-readable, and semantically rich.

Your journey from candidate concepts to a full thesaurus mirrors the evolution of AI itself: from simple rule-based systems to sophisticated, context-aware intelligence. And just like AI, your knowledge graph will continue to evolve, learn, and improve.

Open Taxonomies about Artificial Intelligence

Congress.gov Artificial Intelligence Taxonomy

Open Data Institute A Data for AI Taxonomy

MIT AI Risk Repository Taxonomy

UC Berkeley A Taxonomy for Trustworthiness for Artificial Intelligence

University of Southampton AI Taxonomy

US–EU Terminology and Taxonomy for Artificial Intelligence - Second Edition

Disclaimer: There are more nuances and specifics about SKOS modeling and ontology modeling. All information within does not fully describe all things SKOS or ontology. Any omission is due to conciseness.
